Facebook parent Meta is seeing a “rapid rise” in fake profile photos generated by artificial intelligence.

Publicly available technology like “generative adversarial networks” (GAN) allows anyone — including threat actors — to create eerie deepfakes, producing scores of synthetic faces in seconds.

These are “basically photos of people who do not exist,” said Ben Nimmo, Global Threat Intelligence lead at Meta. “It’s not actually a person in the picture. It’s an image created by a computer.”

“More than two-thirds of all the [coordinated inauthentic behavior] networks we disrupted this year featured accounts that likely had GAN-generated profile pictures, suggesting that threat actors may see it as a way to make their fake accounts look more authentic and original,” Meta revealed in public reporting Thursday.

Investigators at the social media giant “look at a combination of behavioral signals” to identify the GAN-generated profile photos, an advance over reverse-image searches that lets them identify more than just stock photo profile pictures.

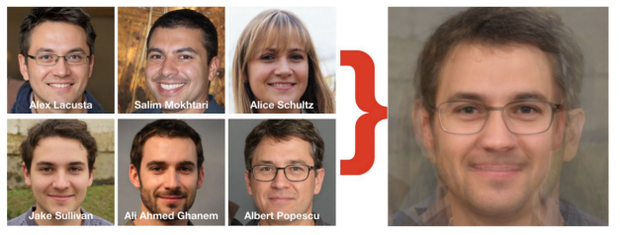

Meta has shown some of the fakes in a recent report. The following two images are among several that are fake. When they’re superimposed over each other, as shown in the third image, all of the eyes align exactly, revealing their artificiality.

Image credits: Meta (first two images); Meta/Graphika (superimposed image)
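
The superimposition check described above can be approximated in a few lines of code. What follows is a minimal sketch, not Meta's method: it assumes eye positions have already been extracted from each profile photo by some face-landmark tool and that all images were resized to the same dimensions. The function names, the 2-pixel tolerance and the coordinates are hypothetical values made up for illustration.

```python
from statistics import pstdev

# Each entry is ((left_eye_x, left_eye_y), (right_eye_x, right_eye_y)) in pixels.
# The coordinates below are invented for illustration only; in practice they
# would come from a face-landmark detector run on same-sized images.
suspect_profiles = [
    ((385, 480), (640, 481)),
    ((386, 479), (641, 480)),
    ((384, 481), (639, 480)),
]
ordinary_photos = [
    ((300, 410), (520, 400)),
    ((410, 520), (690, 540)),
    ((350, 300), (560, 330)),
]


def eye_spread(eye_coords):
    """Return the largest standard deviation (in pixels) among the four eye coordinates."""
    # Regroup the data so each column holds one coordinate across all images.
    columns = zip(*[(lx, ly, rx, ry) for (lx, ly), (rx, ry) in eye_coords])
    return max(pstdev(column) for column in columns)


def eyes_align(eye_coords, tolerance_px=2.0):
    """Flag a set of photos whose eyes sit at nearly identical pixel positions."""
    # tolerance_px is an arbitrary, assumed threshold, not a published value.
    return len(eye_coords) >= 2 and eye_spread(eye_coords) <= tolerance_px


print(eyes_align(suspect_profiles))  # True
print(eyes_align(ordinary_photos))   # False
```

A match on this check alone would not prove an image is synthetic, since cropped headshots can also be tightly framed. It is best read as one signal among several, in line with the “combination of behavioral signals” Meta describes.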

Those trained to spot mistakes in AI images are quick to notice that not all of them are picture-perfect: some have telltale melted backgrounds or mismatched earrings.

Image credit: Meta

“There’s a whole community of open search researchers who just love nerding out on finding those [imperfections],” Nimmo said. “So what threat actors may think is a good way to hide is actually a good way to be spotted by the open source community.”

But the increasing sophistication of generative adversarial networks, which will soon rely on algorithms to produce content indistinguishable from that produced by humans, has created a complicated game of whack-a-mole for the social media company’s global threat intelligence team.

Since public reporting began in 2017, more than 100 countries have been the target of what Meta refers to as “coordinated inauthentic behavior” (CIB). Meta said the term refers to “coordinated efforts to manipulate public debate for a strategic goal where fake accounts are central to the operation.”

Since Meta first began publishing threat reports just five years ago, the tech company has disrupted more than 200 global networks – spanning 68 countries and 42 languages – that it says violated policy. According to Thursday’s report, “the United States was the most targeted country by global [coordinated inauthentic behavior] operations we’ve disrupted over the years, followed by Ukraine and the United Kingdom.”

Russia led the charge as the most “prolific” source of coordinated inauthentic behavior, with 34 networks originating from the country, according to Thursday’s report. Iran (29 networks) and Mexico (13 networks) also ranked high among geographic sources.

“Since 2017, we’ve disrupted networks run by people linked to the Russian military and military intelligence, marketing firms and entities associated with a sanctioned Russian financier,” the report indicated. “While most public reporting has focused on various Russian operations targeting America, our investigations found that more operations from Russia targeted Ukraine and Africa.”

“If you look at the sweep of Russian operations, Ukraine has been consistently the single biggest target they’ve picked on,” said Nimmo, noting that this was true even before the Kremlin’s invasion. But the United States also ranks among the culprits violating Meta’s policies governing coordinated online influence operations.

Last month, in a rare attribution, Meta reported individuals “associated with the US military” promoted a network of roughly three dozen Facebook accounts and two dozen Instagram accounts focused on U.S. interests abroad, zeroing in on audiences in Afghanistan and Central Asia.

Nimmo said last month’s removal, which marks the first takedown associated with the U.S. military, relied on a “range of technical indicators.”

“This particular network was operating across a number of platforms, and it was posting about general events in the regions it was talking about,” Nimmo continued. “For example, describing Russia or China in those areas.” Nimmo added that Meta went “as far as we can go” in pinning down the operation’s connection to the U.S. military, though the company did not cite a particular service branch or military command.

The report revealed that the majority (two-thirds) of coordinated inauthentic behavior removed by Meta “most frequently targeted people in their own country.” Top among that group were government agencies in Malaysia, Nicaragua, Thailand and Uganda, which were found to have targeted their own populations online.

The tech behemoth said it’s working with other social media companies to expose cross-platform information warfare.

“We’ve continued to expose operations running on many different internet services at once, with even the smallest networks following the same diverse approach,” Thursday’s report noted. “We’ve seen these networks operate across Twitter, Telegram, TikTok, Blogspot, YouTube, Odnoklassniki, VKontakte, Change[.]org, Avaaz, other petition sites and even LiveJournal.”

But critics say these kinds of collaborative takedowns are too little, too late. In a scathing rebuke, Sacha Haworth, executive director of the Tech Oversight Project, called the report “[not] worth the paper they’re printed on.”

“By the time deepfakes or propaganda from malevolent foreign state actors reaches unsuspecting people, it’s already too late,” Haworth told CBS News. “Meta has proven that they are not interested in altering their algorithms that amplify this dangerous content in the first place, and this is why we need lawmakers to step up and pass laws that give them oversight over these platforms.”

Last month, a 128-page investigation by the Senate Homeland Security Committee, obtained by CBS News, alleged that social media companies, including Meta, are prioritizing user engagement, growth, and profits over content moderation.

Meta reported to congressional investigators that it “remove[s] millions of violating posts and accounts every day,” and its artificial intelligence content moderation blocked 3 billion phony accounts in the first half of 2021 alone.

The company added that it invested more than $13 billion in safety and security teams between 2016 and October 2021, with over 40,000 people dedicated to moderation, or “more than the size of the FBI.” But as the committee noted, “that investment represented approximately 1 percent of the company’s market value at the time.”

Nimmo, who was directly targeted with disinformation when 13,000 Russian bots declared him dead in a 2017 hoax, says the community of online defenders has come a long way, adding that he no longer feels as though he is “screaming into the wilderness.”

“These networks are getting caught earlier and earlier. And that’s because we have more and more eyes in more and more places. If you look back to 2016, there really wasn’t a defender community. The guys playing offense were the only ones on the field. That’s no longer the case.”