Current fail-safe measures designed to ensure that material generated by artificial intelligence (AI) is clearly labeled do not meet an appropriate standard, and reliable labeling may not be possible with current technology, an expert warns.

“There’s no interest, and it’s difficult to do,” Michael Wilkowski, chief technology officer of AI-driven bank compliance platform Silent Eight, told Fox News Digital, stressing that, in his view, it’s “actually nearly impossible to discover” if something was AI-generated or not.

At first glance, the current watermarking method appears more advanced than the traditional one, which places a visible mark over the material so that the watermark is clear and obvious. AI-generated material instead carries an embedded code.

AI companies have championed the digital watermark as a means of combating concerns that AI-generated images and videos will end up blurring the line between authentic and generated content, with everyone from OpenAI to Meta pledging to work on the technology, Wired magazine reported.

But studies from multiple American universities have found it not only possible but relatively accessible for users to remove or “break” the watermarks. Researchers from the University of California, Santa Barbara, and Carnegie Mellon in August found the watermarks “provably removable” – not just specific examples but any example they came across.

“Our theoretical analysis proved that the proposed regeneration attack is able to remove any invisible watermark from images and make the watermark undetectable by any detection algorithm,” the study concluded.

A watermark simulation is shown on a counterfeit $100 bill at the U.S. Secret Service in Washington, D.C., on March 12, 2014. (Andrew Harrer / Bloomberg via Getty Images)

The “regeneration attack,” as indicated by the name, works by introducing “noise” to the image – effectively destroying part of the image with the watermark on it – and then reconstructing the image. The study determined that this approach was “flexible and can be instantiated with many existing image-denoising algorithms and pre-trained generative models.”
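The noise-then-reconstruct idea can be illustrated with a toy sketch. This is not the researchers' code: it assumes a simple least-significant-bit watermark and uses a moving-average filter as a stand-in for the image-denoising algorithms and generative models the study describes. All function names and parameters here are hypothetical, and the "image" is a single smooth row of pixel values.

```python
import math
import random

def make_image(n=4096):
    """Smooth 1-D stand-in for an image row (values stay well inside 0..255)."""
    return [round(128 + 100 * math.sin(i / 50)) for i in range(n)]

def embed_watermark(pixels, bits):
    """Assumed scheme: hide one watermark bit in each pixel's lowest bit."""
    return [(p & ~1) | b for p, b in zip(pixels, bits)]

def detect_watermark(pixels, bits):
    """Fraction of pixels whose lowest bit matches the watermark (0.5 is chance)."""
    return sum((p & 1) == b for p, b in zip(pixels, bits)) / len(bits)

def add_noise(pixels, rng, sigma=4.0):
    """Step 1 of the attack: perturb every pixel with Gaussian noise."""
    return [min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in pixels]

def denoise(pixels, radius=2):
    """Step 2: reconstruct with a moving average (stand-in for a real denoiser)."""
    out = []
    for i in range(len(pixels)):
        window = pixels[max(0, i - radius): i + radius + 1]
        out.append(round(sum(window) / len(window)))
    return out

def regeneration_attack(pixels, rng):
    """Destroy fine detail with noise, then rebuild the visible content."""
    return denoise(add_noise(pixels, rng))

def mean_abs_error(a, b):
    """Average per-pixel difference, a rough measure of visible damage."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def demo(seed=0):
    rng = random.Random(seed)
    original = make_image()
    bits = [rng.randint(0, 1) for _ in original]
    marked = embed_watermark(original, bits)
    attacked = regeneration_attack(marked, rng)
    return {
        "match_before": detect_watermark(marked, bits),
        "match_after": detect_watermark(attacked, bits),
        "mean_abs_error": mean_abs_error(original, attacked),
    }
```

Calling `demo()` returns the detector's match rate before and after the attack along with the average pixel change. In this toy setup the match rate falls to roughly chance while the pixel values stay close to the original, which is the property that makes such attacks hard to defend against.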

Researchers at the University of Maryland conducted their own study of the same process in September, determining that “we don’t have any reliable watermarking at this point” and saying that the team “broke all of them.”

The Maryland team not only demonstrated that it could remove the watermark but also showed that it could “spoof,” or trick, the detectors, exposing failures in several parts of the process that render it faulty at best.

“If someone has the technology … the problem is a bad actor can use exactly the same tools or algorithm[s],” Wilkowski said, noting that many of the models and AI tools end up “widely available” to use.

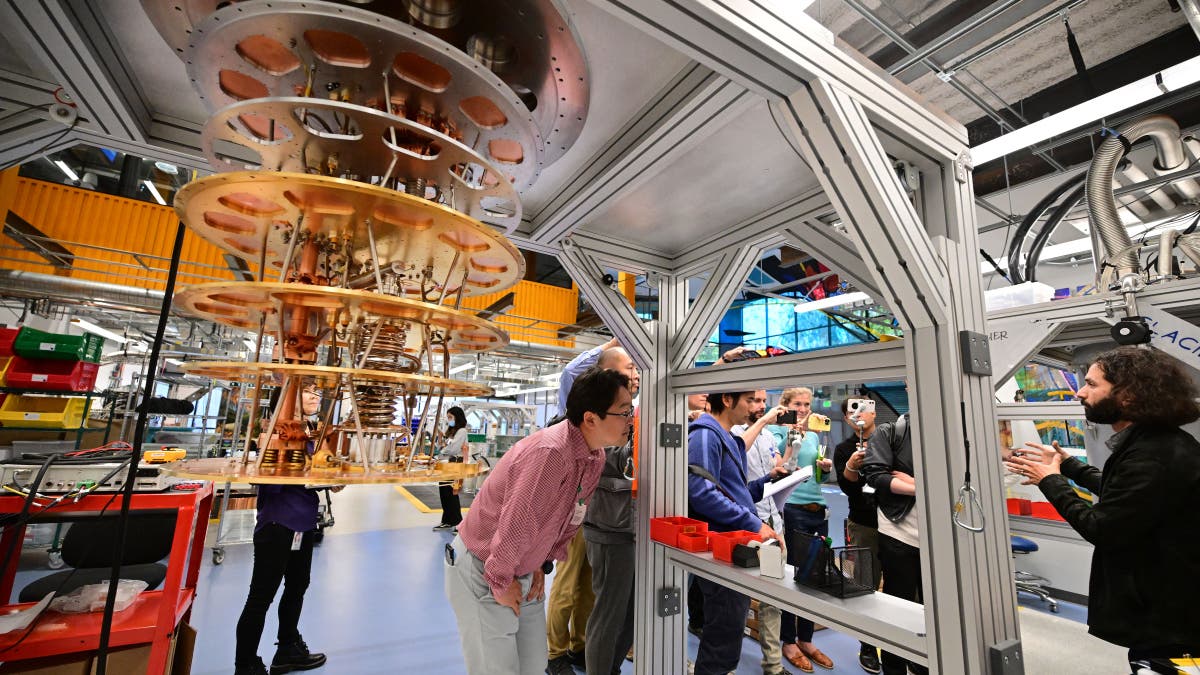

In Google’s Quantum AI laboratory, people work among the tech-firm-typical climbing walls and electric bikes to shape the next generation of computers. (Frederic J. Brown / AFP via Getty Images / File)

“If anyone can implement the tools to verify [the watermark], the bad actors can actually build tools to remove the watermarks,” he added, saying that algorithm-based methods of applying or detecting watermarks are especially vulnerable since it takes only “another algorithm” to counteract them.

And that covers only the difficulty of discerning whether visual media is AI-generated. For text, or for something as complex as trade transactions, no such process exists, according to Wilkowski.

His company can identify AI-generated text only by examining it for possible sources, since AI-generated material is not original but derives from the existing material used to train the models.

One of the issues that security firms face is that as the methods of detection improve, so do the methods of avoiding detection. If a mechanism sets a threshold for triggering an investigation into suspicious transactions and trade activity, bad actors will simply find a way to conduct their business just below that threshold.
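A small sketch shows why a fixed threshold is easy to sidestep. The threshold value, the three-day window and the record fields below are all hypothetical and are not taken from Silent Eight or any real compliance system. The second function shows one possible counter-idea, summing an account's recent activity, which is an assumption added here for contrast rather than something the article describes.

```python
from collections import defaultdict

THRESHOLD = 10_000  # hypothetical reporting threshold

def flag_single(transactions, threshold=THRESHOLD):
    """Naive rule: flag any single transaction at or above the threshold."""
    return [t for t in transactions if t["amount"] >= threshold]

def flag_aggregate(transactions, threshold=THRESHOLD, window_days=3):
    """Flag accounts whose total within a rolling window reaches the threshold."""
    by_account = defaultdict(list)
    for t in transactions:
        by_account[t["account"]].append(t)

    flagged = set()
    for account, txs in by_account.items():
        txs.sort(key=lambda t: t["day"])
        start, total = 0, 0
        for t in txs:
            total += t["amount"]
            # Drop transactions that have fallen out of the window.
            while t["day"] - txs[start]["day"] >= window_days:
                total -= txs[start]["amount"]
                start += 1
            if total >= threshold:
                flagged.add(account)
    return flagged

def sample_transactions():
    """Account A splits its activity into pieces just under the threshold."""
    return [
        {"account": "A", "day": 1, "amount": 9_900},
        {"account": "A", "day": 1, "amount": 9_900},
        {"account": "A", "day": 2, "amount": 9_900},
        {"account": "A", "day": 2, "amount": 9_900},
        {"account": "B", "day": 1, "amount": 12_000},
        {"account": "C", "day": 1, "amount": 500},
        {"account": "C", "day": 9, "amount": 600},
    ]
```

With the sample data, `flag_single` sees only account B, because every transfer from account A sits just under the line. `flag_aggregate` also picks up account A, though a bad actor could in turn spread activity across more accounts or longer periods, which is the cat-and-mouse dynamic described above.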

This view shows the trading floor during TKO Group Holdings’ listing at the New York Stock Exchange on Sept. 12, 2023, in New York City. (Michelle Farsi / Zuffa LLC)

Spanish police and Interpol over the past year have dismantled a betting ring that manipulated sports betting by hijacking the satellite signal from sports arenas to observe what was going on before even the bookies could see and adjust their odds. Authorities only realized something was amiss after noticing some unusually large bets around a pingpong tournament.

“We become more and more clever every day in discovering that it’s more sophisticated than it was 10 years ago,” Wilkowski said.

“It’s about discovering irregularities; it’s about discovering that someone made a wire transfer in the middle of the night or about someone doing wire transfers from a completely different place in the world while, actually, a few minutes ago they were in a completely different position,” he added. “It’s all about big data processing … it’s very sophisticated.”
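The two irregularities Wilkowski mentions, a transfer in the middle of the night and transfers from places too far apart to reach in the time between them, can be sketched as simple checks. This is only an illustration under stated assumptions: the night-time hours, the 900 km/h speed limit and the record layout are hypothetical, and a production system would combine many more signals.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def is_night(ts, start_hour=0, end_hour=5):
    """Hypothetical rule: treat 00:00 to 04:59 local time as unusual hours."""
    return start_hour <= ts.hour < end_hour

def impossible_travel(prev, curr, max_kmh=900.0):
    """True if moving between the two events would need an implausible speed."""
    hours = (curr["time"] - prev["time"]).total_seconds() / 3600
    dist = haversine_km(prev["lat"], prev["lon"], curr["lat"], curr["lon"])
    if hours <= 0:
        return dist > 0
    return dist / hours > max_kmh

# Example events: a transfer from Warsaw, then one from New York 10 minutes later.
warsaw = {"time": datetime(2023, 10, 1, 3, 0), "lat": 52.23, "lon": 21.01}
new_york = {"time": datetime(2023, 10, 1, 3, 10), "lat": 40.71, "lon": -74.01}
```

Here `impossible_travel(warsaw, new_york)` is true because no one can cross the Atlantic in 10 minutes, and `is_night(warsaw["time"])` is true because the transfer happened at 3 a.m.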